The write performance of a disk is influenced by three factors: the physical properties of the storage device (e.g. the rotational speed in revolutions per minute of a mechanical disk), the ratio of the disk's I/O unit to the I/O request size, and the OS/application.

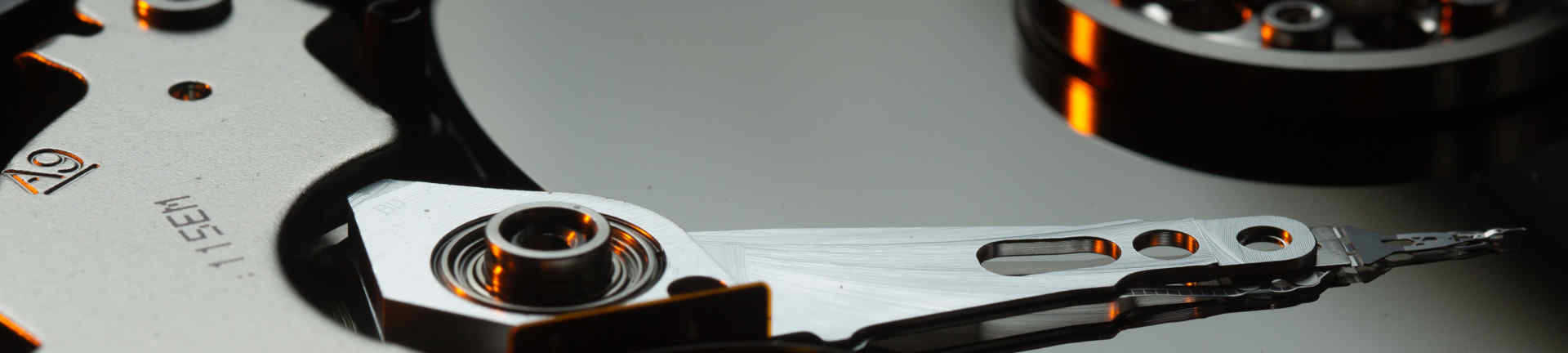

Physical properties of an HDD

One major drawback of traditional mechanical disks is that, for an I/O request to be honored, the head has to reach the desired starting position (seek delay) and the platter has to reach the desired starting position (rotational delay).

This is true for both sequential and random I/O. With sequential I/O, however, the delay becomes far less noticeable because more data can be written without repositioning the head. An “advanced format” hard disk has a sector size of 4096 bytes (the smallest I/O unit) and a cylinder size in the megabyte range. A whole cylinder can be read without repositioning the head. So there is still a seek and a rotational delay involved, but the amount of data that can be read or written without further repositioning is considerably higher. Also, moving from one cylinder to the next is significantly cheaper than moving from the innermost to the outermost cylinder (the worst-case seek).

Writing 10 consecutive sectors incurs the seek and rotational delay once; writing 10 sectors spread across the disk incurs them up to 10 times.

In general, both sequential and random I/O involve seek and rotational delays. Sequential I/O takes advantage of sequential locality to minimize those delays.
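To put rough numbers on the ten-sector comparison above, here is a back-of-the-envelope sketch. The seek time, spindle speed, and transfer rate are assumed values chosen for illustration, not measurements of any particular drive; only the 4096-byte sector size comes from the text.

```python
# Back-of-the-envelope cost model. All timing figures are assumptions.
AVG_SEEK_MS = 9.0           # assumed average seek time
RPM = 7200                  # assumed spindle speed
SECTOR_BYTES = 4096         # "advanced format" sector size
TRANSFER_MB_PER_S = 150.0   # assumed sustained media transfer rate


def avg_rotational_delay_ms(rpm):
    """On average the platter has to turn half a revolution."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def transfer_ms(sectors):
    """Time to move the payload itself once the head is in position."""
    seconds = sectors * SECTOR_BYTES / (TRANSFER_MB_PER_S * 1_000_000)
    return seconds * 1000


def write_cost_ms(sectors, repositionings):
    """Total cost = positioning overhead per repositioning + data transfer."""
    positioning = repositionings * (AVG_SEEK_MS + avg_rotational_delay_ms(RPM))
    return positioning + transfer_ms(sectors)


sequential_ms = write_cost_ms(10, repositionings=1)   # 10 consecutive sectors
random_ms = write_cost_ms(10, repositionings=10)      # 10 scattered sectors

print(f"sequential: {sequential_ms:.2f} ms")
print(f"random:     {random_ms:.2f} ms")
```

Under these assumptions the positioning overhead dominates both cases, so the scattered write costs close to ten times as much even though the payload is identical.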

Physical properties of an SSD

As you know, a solid-state disk does not have moving parts because it’s usually built from flash memory. The data is stored in cells. Multiple cells form a page, the smallest I/O unit, ranging from 2 KB to 16 KB in size. Multiple pages are grouped into blocks; a block contains between 128 and 256 pages.

The problem is two-fold. Firstly, a page can only be written to once before it has to be erased, and erasure works only on whole blocks. Once all pages in a block contain data, none of them can be written to again until the whole block is erased. So if a page needs to be updated and the block has no free pages left, the whole block must be erased and rewritten.

Secondly, the number of write cycles an individual block can endure is limited. To keep some blocks from approaching or exceeding that limit faster than others, a technique called wear leveling distributes writes evenly across all blocks, independent of the logical write pattern. This process also involves block erasure.

To alleviate the performance penalty of block erasure, SSDs employ a garbage collection process that reclaims pages marked as stale: the pages of a block that are still valid are copied to a new block, and the original block is then erased.
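The copy-then-erase step can be sketched as a toy model. This is an illustration under simplifying assumptions, not how any real SSD controller is implemented: a block is a plain list, an erased page is `None`, and the `stale` flag and the `garbage_collect` helper are hypothetical names. The block has four pages only to keep the example readable.

```python
def garbage_collect(source_block):
    """Copy the live pages of source_block into a fresh block, then erase it.

    Returns (new_block, erased_block, pages_copied).
    """
    size = len(source_block)

    # Keep only pages that hold data and are not marked stale.
    live_pages = [
        page for page in source_block
        if page is not None and not page["stale"]
    ]

    # The fresh block receives the live pages; the rest stays erased (None).
    new_block = live_pages + [None] * (size - len(live_pages))

    # Erasure always affects the whole block, never a single page.
    erased_block = [None] * size

    return new_block, erased_block, len(live_pages)


block = [
    {"data": "a", "stale": False},
    {"data": "b", "stale": True},
    {"data": "c", "stale": False},
    {"data": "d", "stale": True},
]

new_block, erased_block, copied = garbage_collect(block)
free_pages = new_block.count(None)
```

In this run two live pages are copied to reclaim two stale ones, which shows why garbage collection itself adds physical writes that no application asked for.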

Both aspects can cause more data to be physically read and written than the logical write requires. A single full-page write can trigger a read/write sequence that is 128 to 256 times larger, depending on the number of pages per block. This is known as write amplification. Random writes potentially hit considerably more blocks than sequential writes, making them significantly more expensive.
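The 128x to 256x figure and the gap between sequential and random writes follow from simple arithmetic. The sketch below assumes the worst case described above: every touched block is full and has to be rewritten entirely, sequential writes start on a block boundary, and the controller does no remapping. Real drives usually do better, so treat the numbers as an upper bound. The function names are made up for this example.

```python
import math


def blocks_touched_sequential(pages_written, pages_per_block):
    """Consecutive pages fill one block after another."""
    return math.ceil(pages_written / pages_per_block)


def blocks_touched_random_worst_case(pages_written, total_blocks):
    """Worst case: every page lands in a different block."""
    return min(pages_written, total_blocks)


def write_amplification(pages_written, blocks_rewritten, pages_per_block):
    """Physical pages written divided by logical pages written."""
    return blocks_rewritten * pages_per_block / pages_written


PAGES_PER_BLOCK = 128    # lower bound from the text
TOTAL_BLOCKS = 100_000   # assumed drive size, only used as a cap

# A single page update inside a full block.
single_page = write_amplification(1, 1, PAGES_PER_BLOCK)

# 256 pages written sequentially versus scattered across the drive.
seq_blocks = blocks_touched_sequential(256, PAGES_PER_BLOCK)
rnd_blocks = blocks_touched_random_worst_case(256, TOTAL_BLOCKS)
seq_factor = write_amplification(256, seq_blocks, PAGES_PER_BLOCK)
rnd_factor = write_amplification(256, rnd_blocks, PAGES_PER_BLOCK)

print(single_page, seq_factor, rnd_factor)
```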

Disk I/O unit to I/O request size ratio

As outlined before, a disk imposes a minimum size on the I/O unit involved in reads and writes. If only a single byte is written to disk, the whole unit has to be read, modified, and written back.

Sequential I/O is likely to produce large writes as the I/O load increases (e.g. in the case of a database transaction log), whereas random I/O tends to involve smaller I/O requests. As those requests become smaller than the smallest I/O unit, the overhead of processing them increases, adding to the cost of random I/O. This is another example of write amplification as a consequence of storage device characteristics. In this case, however, both HDDs and SSDs are affected.
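How much a small request gets inflated can be computed by rounding it out to unit boundaries. The following is a minimal sketch assuming a 4096-byte unit, as in the "advanced format" example; `aligned_span` and `amplification` are hypothetical helpers, not part of any OS API.

```python
def aligned_span(offset, length, unit):
    """Return (start, size) of the unit-aligned range covering the request."""
    start = (offset // unit) * unit            # round down to a unit boundary
    end = -(-(offset + length) // unit) * unit  # round up to a unit boundary
    return start, end - start


def amplification(offset, length, unit):
    """Bytes physically written per byte logically written."""
    _, physical = aligned_span(offset, length, unit)
    return physical / length


UNIT = 4096

one_byte = aligned_span(5000, 1, UNIT)        # a single byte inside unit 1
straddling = aligned_span(4000, 200, UNIT)    # 200 bytes across a boundary
full_unit = aligned_span(8192, 4096, UNIT)    # exactly one aligned unit

print(one_byte, amplification(5000, 1, UNIT))
print(straddling, amplification(4000, 200, UNIT))
print(full_unit, amplification(8192, 4096, UNIT))
```

A request that matches the unit size and alignment is written as-is, while a one-byte write costs a full unit and a small write that straddles a boundary costs two.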

OS/application

The OS has various mechanisms to optimize both sequential and random I/O.

A write triggered by an application is usually not processed immediately (unless the application requests it by means of synchronous/direct I/O or a sync command). Instead, the changes are applied in memory, in the so-called page cache, and written to disk at a later point in time.

By doing so, the OS maximizes the total amount of data it has available when it finally writes, and with it the size of individual I/Os. Individual I/O operations that would have been inefficient to execute can be aggregated into one potentially large, more efficient operation (e.g. several individual writes to a specific sector can become a single write). This strategy also allows for I/O scheduling: choosing the processing order that is most efficient for executing the I/Os, even if it differs from the order in which the application or applications issued them.
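The aggregation and reordering described above can be illustrated with a simplified model. This is not the algorithm of any particular kernel or I/O scheduler; it only assumes that pending writes are known as `(offset, length)` pairs and that overlapping or directly adjacent ranges may be merged. The `coalesce` helper is a made-up name.

```python
def coalesce(requests):
    """Sort pending writes by offset and merge overlapping or adjacent ones.

    requests: iterable of (offset, length) tuples in the order issued.
    Returns a list of merged (offset, length) tuples in ascending order.
    """
    merged = []
    for offset, length in sorted(requests):
        if merged and offset <= merged[-1][0] + merged[-1][1]:
            # The request starts inside or right after the previous range,
            # so extend that range instead of issuing a separate write.
            last_offset, last_length = merged[-1]
            new_length = max(last_length, offset + length - last_offset)
            merged[-1] = (last_offset, new_length)
        else:
            merged.append((offset, length))
    return merged


# Writes in the order the applications issued them (byte offsets, 4 KiB each).
pending = [
    (8192, 4096),
    (0, 4096),
    (4096, 4096),
    (0, 4096),      # the same sector written a second time
    (40960, 4096),
]

scheduled = coalesce(pending)
print(scheduled)
```

Five issued writes become two physical ones: a single 12 KiB sequential write and one isolated 4 KiB write.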

Consider the following scenario: a web server request log and a database transaction log are being written to the same disk. The web server write operations would normally interfere with the database write operations if they were executed in the order issued by the applications involved. Because writes are executed asynchronously from the page cache, the OS can reorder those I/O requests into two large sequential write requests, one for each log. While those are being executed, the database can continue to write to the transaction log without any delay.

One caveat here is that, while this should be true for the web server log, not all writes can be reordered at will. A database triggers a disk sync operation (fsync on Linux/UNIX, FlushFileBuffers on Windows) whenever the transaction log has to be written to stable storage as part of a commit. At that point, the OS cannot delay the write operations any further and has to execute all previous writes to the file in question immediately. If the web server were to do the same, there could be a noticeable performance impact because the order would then be dictated by those two applications. That is why it’s a good idea to place a transaction log on a dedicated disk: it maximizes sequential I/O throughput in the presence of other disk syncs or large amounts of other I/O operations (the web server log shouldn’t be a problem). Otherwise, asynchronous writes based on the page cache might no longer be able to hide the I/O delays as the total I/O load and/or the number of disk syncs increases.
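The difference between a write the OS may defer and one it may not can be shown from the application side. The sketch below uses Python's `os.fsync`, which calls the native `fsync` on Unix. The file names and the two helper functions are hypothetical and only stand in for the web server log and the transaction log of the scenario above.

```python
import os
import tempfile


def append_buffered(path, record):
    """Append a record and return once the data has been handed to the OS.

    The data may still sit in the page cache; the OS decides when it
    actually reaches the disk and may reorder or merge it with other writes.
    """
    with open(path, "ab") as log:
        log.write(record)


def append_durable(path, record):
    """Append a record and return only after a disk sync has completed."""
    with open(path, "ab") as log:
        log.write(record)
        log.flush()              # move Python's own buffer into the OS
        os.fsync(log.fileno())   # force the file's data to stable storage


with tempfile.TemporaryDirectory() as tmp:
    access_log = os.path.join(tmp, "access.log")   # stands in for the web server log
    txn_log = os.path.join(tmp, "txn.log")         # stands in for the transaction log

    append_buffered(access_log, b"GET /index.html 200\n")
    append_durable(txn_log, b"COMMIT 42\n")

    with open(txn_log, "rb") as f:
        committed = f.read()
```

Both calls produce the same file contents; what differs is when the call returns and how much freedom the OS keeps to schedule the write.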